
As learning analytics continues to rise up the agenda in the corporate learning & development (L&D) sector, one thing is becoming glaringly apparent: we should not expect a one-size-fits-all, off-the-shelf approach to learning analytics. This is a specialist discipline that cannot be bottled up into a single product. Sure, there are products such as Knewton, a Product as a Service platform used to power other people’s tools. There are also LMS bolt-ons like Desire2Learn Insights or Blackboard Analytics, but even they are not sold as off-the-shelf products. The Blackboard team, for example, “tailors each solution to your unique institutional profile”. There are just far too many organisational factors at play for an L&D practitioner to be able to implement a learning analytics programme using an off-the-shelf tool.

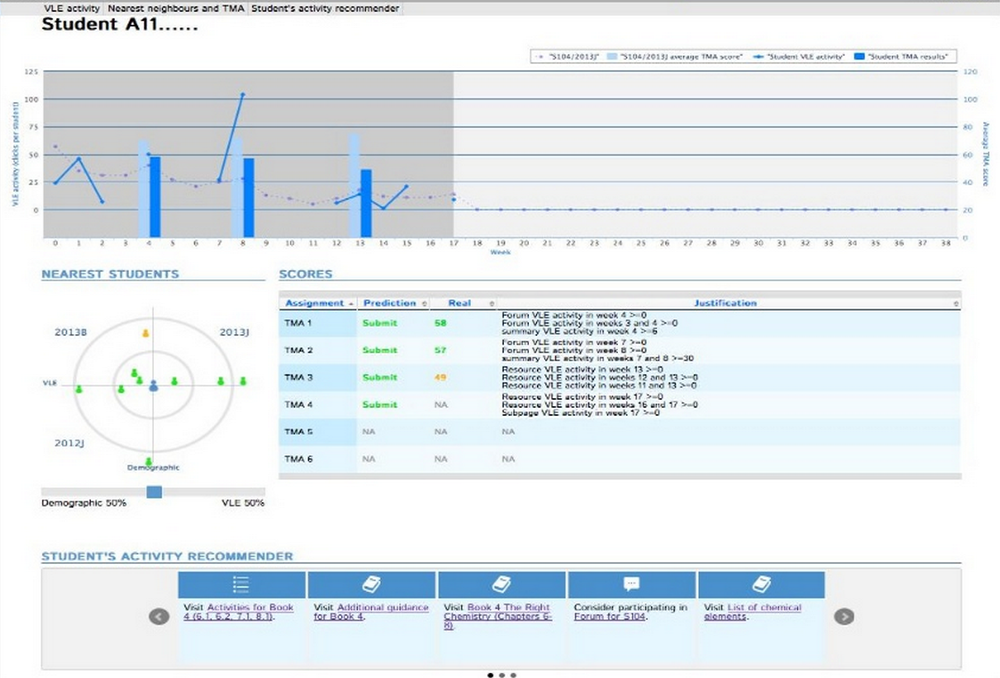

What a learning analytics platform looks like

An example of one platform (not a commercially available product but probably the most advanced learning analytics platform I’ve yet seen) is the Open University’s OU Analyse platform. They demonstrated this at MoodleMoot UK and Ireland recently. The platform is closely geared to the OU’s own Moodle-based VLE and as such is built to answer their own questions. This predictive analytics platform analyses demographic and course data from their own VLE with a view to predicting which students are likely to fail. Tutors have a login to the system and can use the dashboard tools to determine which learning interventions to recommend to a student in order to get them back on a path to success.

This requires manual intervention by the tutor at present, but the aim is to put the activity recommender directly in front of students. This could be presented via the student’s VLE homepage or via email notifications, for example. Some further work needs to be done here in order to undertake research and provide evidence that the recommended actions do indeed lead to improved learner performance, but the OU are well on the way to doing something genuinely powerful in the learning analytics space.

Why every learning analytics platform will be different

As more learning analytics tools reach the market, it is important to understand that you cannot just plug this sort of system into any academic VLE or corporate LMS and expect it to deliver nuggets of gold. As I’ve blogged before, good analytics starts with determining the right question. In the OU’s case this is, ‘how do we identify at-risk students and deliver appropriate support to them?’ In a corporate context the question could be, ‘how do we identify low-performing staff and deliver appropriate support to them?’ or, ‘what learning path will most likely result in career progression for any member of staff?’ The question each organisation asks will determine the design of their learning analytics effort, their predictive models and their dashboard presentations.

Another major factor affecting the design of your learning analytics programme will be the pedagogic models used in your organisation. For example, OU Analyse is designed around the standard OU curriculum (familiar to many in the HE sector) of repeating time-dependent units consisting mainly of reading, video and knowledge checks building to an assignment. The average corporate learning model is very different indeed. We know from decades of research that only about 10% of workplace learning is via the formal, structured course. The remaining 90% comprises a mix of social and informal learning, which will happen inside and outside the LMS. I designed an infographic to illustrate this for MoodleMoot earlier this month (the infographic also contains links to that workplace learning research, if you’re interested).

The pedagogic model has a significant effect on the predictive models used in your analytics programme. In the OU’s case, their particular pedagogic model and the objective of their analytics effort resulted in four predictive models being implemented:

- Bayesian classifier

- Classification and regression tree (CART)

- k Nearest Neighbours (k-NN) with demographic/static data

- k-NN with VLE data.

There is more detailed information on these in their Slideshare.
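To give a flavour of what one of these models does, here is a minimal sketch of a k-NN classifier over VLE activity data. It is not the OU's implementation: the feature choice (weekly clicks, forum posts, quiz attempts), the toy training records and the function name `predict_outcome` are all assumptions made up for illustration.

```python
from collections import Counter
from math import dist

# Hypothetical training data, invented for this sketch:
# (weekly VLE clicks, forum posts, quiz attempts) -> known outcome.
TRAINING = [
    ((120, 8, 5), "pass"),
    ((95, 5, 4), "pass"),
    ((110, 6, 5), "pass"),
    ((15, 0, 1), "fail"),
    ((30, 1, 1), "fail"),
    ((22, 0, 2), "fail"),
]


def predict_outcome(features, training=TRAINING, k=3):
    """Return the majority outcome among the k most similar past students."""
    # Rank past students by Euclidean distance to the new student's activity.
    nearest = sorted(training, key=lambda row: dist(row[0], features))[:k]
    # Let the k nearest neighbours vote on the predicted outcome.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# A student with very little VLE activity is flagged as at risk.
print(predict_outcome((20, 1, 1)))
```

A real model would need far more data and would normally scale each feature first, since raw click counts would otherwise dominate the distance. The point is only that the prediction comes from comparing a student with similar past students.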

Your learning analytics platform will be built by data scientists, not learning experts

Now I’m not even going to pretend to understand how those predictive models all work, but I included them to demonstrate just how quickly your average corporate L&D practitioner is going to get way out of their depth with this stuff. And buying an off-the-shelf analytics product is not going to help with that. While technology products do exist to analyse datasets and output understandable and actionable data to lovely looking dashboards, they cannot exist in isolation from the specialist expertise needed to implement them. You still need a data scientist to perform a highly specialised set of tasks including:

- determining the exact question that needs answering

- evaluating the pedagogic models and mapping the learning design

- identifying the data sources and merging data

- formatting and cleansing the merged data

- identifying the correct predictive models to use on the data

- interpreting the results and building custom dashboard components

- measuring the resulting changes in learner performance or behaviour

- refining the predictive models, measuring the results again, and repeating!
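As a rough illustration of the merging and cleansing steps in the list above, here is a small sketch that joins an LMS export to HR records. The field names (`staff_id`, `score`, `department`), the sample rows and the rule of dropping incomplete records are assumptions for the example, not a recommended pipeline.

```python
# Hypothetical exports, invented for this sketch: LMS results and HR records.
lms_rows = [
    {"staff_id": " A101 ", "course": "Induction", "score": "82"},
    {"staff_id": "a102", "course": "Induction", "score": ""},
    {"staff_id": "A103", "course": "Induction", "score": "67"},
]
hr_rows = [
    {"staff_id": "A101", "department": "Sales"},
    {"staff_id": "A102", "department": "Finance"},
]


def normalise_id(raw):
    """Strip stray whitespace and unify case so IDs match across systems."""
    return raw.strip().upper()


def merge_and_cleanse(lms, hr):
    """Join LMS rows to HR rows on staff ID, dropping incomplete records."""
    departments = {normalise_id(r["staff_id"]): r["department"] for r in hr}
    merged = []
    for row in lms:
        staff_id = normalise_id(row["staff_id"])
        # Skip rows with no score or no matching HR record (an assumed rule).
        if not row["score"].strip() or staff_id not in departments:
            continue
        merged.append({
            "staff_id": staff_id,
            "department": departments[staff_id],
            "course": row["course"],
            "score": int(row["score"]),
        })
    return merged


print(merge_and_cleanse(lms_rows, hr_rows))
```

Even in this toy case two of the three LMS rows are lost, one to a missing score and one to a missing HR match, which is exactly the sort of decision that needs specialist judgement rather than a default setting.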

Clearly some of these items require close interaction with the L&D team, but in summary there is a real need to bring experienced data scientists into corporate learning and development, not just to set up analytics programmes but to continually monitor, review and refine the results. And it cuts both ways, as those specialists also need to grow their understanding of pedagogic design to build effective learning analytics programmes. Right now there aren’t many people with the required skillsets, but these two worlds are coming together fast.


Some interesting thoughts are coming in via other channels. Niall Sclater commented on Twitter that hopefully some common ground will evolve between institutions so that some basic off-the-shelf products become viable. That sounds sensible; maybe we’ll see this in the corporate space too once the practice starts to become a bit more embedded.


Barry Sampson has also posted a follow-up on his blog, concluding that we probably don’t want to keep this work inside the L&D department. As L&D becomes more strategic within organisations, we need to move these tools and processes OUT of L&D into the wider business. The conversation on Barry’s blog rightly leads into whether Business Intelligence units are the better place to lead on these initiatives. xAPI could also have a role to play in data formatting and collection.
